This article is an introduction to I/O-related technologies. It covers the background you need to demystify advanced frameworks, in particular the popular Netty framework. By learning what is going on “under the hood”, you will be prepared to delve deeper into more advanced topics.

The article may look a bit long, but most sections are just refreshers whose aim is to ease understanding.

We’ll begin with background on high-performance networking. With this context in place, we’ll introduce Netty, its core concepts, and building blocks.

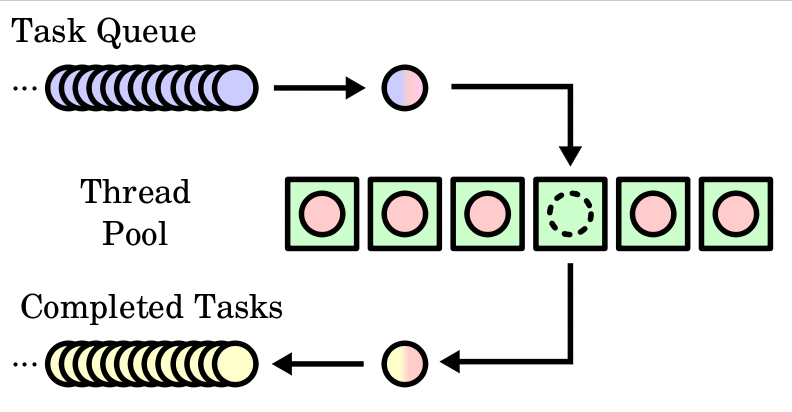

The Thread Pool pattern is a design pattern used to execute multiple tasks on a fixed number of threads. The idea behind using a thread pool is to improve performance and throughput: creating a thread per request does not scale for modern applications, because every thread costs memory and context-switching overhead.

The idea is the following: a task is passed to the thread pool executor, pushed into a blocking queue, and then assigned to a thread for execution. When a thread finishes its task, it requests another. If none is available, the thread can wait or terminate.

📖 Recommended reading: http://tutorials.jenkov.com/java-concurrency/thread-pools.html.
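To make the thread pool flow concrete, here is a minimal sketch using the JDK's `ExecutorService`. The class name `ThreadPoolSketch`, the method `runTasks`, and the toy task (squaring a number) are assumptions made for illustration, not something taken from Netty.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

class ThreadPoolSketch {

    // Hypothetical helper: submits taskCount tasks to a pool of poolSize
    // threads and returns the sum of their results.
    static int runTasks(int poolSize, int taskCount) {
        // A fixed pool queues submitted tasks internally and hands them
        // to whichever worker thread becomes free.
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (int i = 0; i < taskCount; i++) {
                final int id = i;
                // Toy task: each one just returns id squared.
                futures.add(pool.submit(() -> id * id));
            }
            int sum = 0;
            for (Future<Integer> future : futures) {
                sum += future.get(); // waits for that task to finish
            }
            return sum;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown(); // stop accepting tasks, let workers exit
            try {
                pool.awaitTermination(5, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }

    public static void main(String[] args) {
        // 10 tasks share 4 threads instead of getting a thread each.
        System.out.println(runTasks(4, 10));
    }
}
```

Note that ten tasks are served by only four threads here; the remaining tasks simply wait in the executor's queue until a worker is free.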

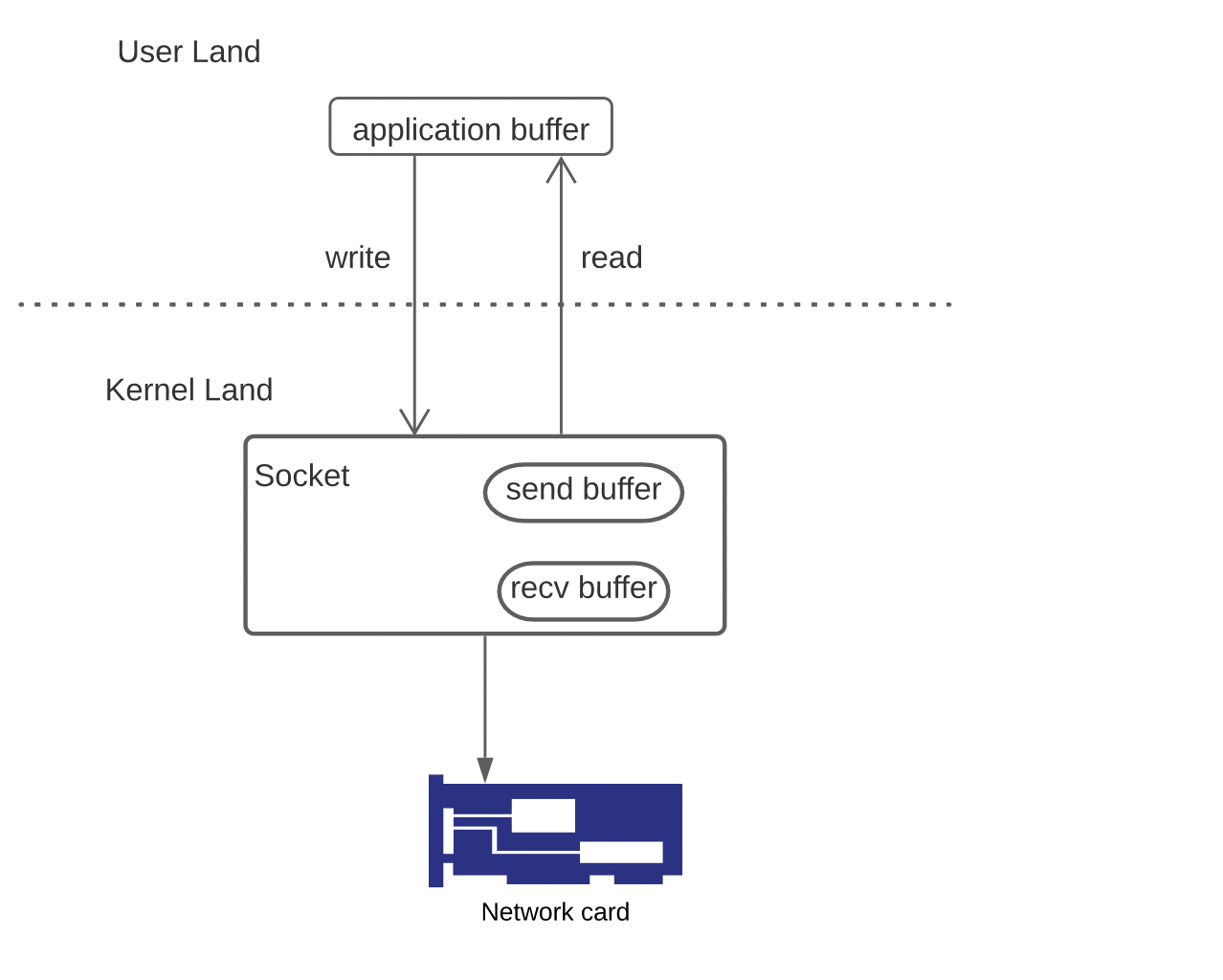

When the application process wants to write data to a remote peer through the write system call, the kernel copies the data to the socket's send buffer before transmitting it to the peer. When the kernel receives data, it copies it to the socket’s receive buffer. The data remains in the receive buffer until the application process consumes it through the read system call.

Let's take a look at some I/O models.
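As a small illustration that every socket comes with its own kernel-side buffers, the sketch below asks the JDK for the send and receive buffer sizes of a fresh, unconnected socket. The class and method names are hypothetical, and the reported sizes depend on the operating system and its configuration.

```java
import java.io.IOException;
import java.net.Socket;

class SocketBufferSketch {

    // Hypothetical helper: returns {sendBufferSize, receiveBufferSize} in bytes.
    static int[] bufferSizes() {
        try (Socket socket = new Socket()) { // unconnected socket
            return new int[] {
                socket.getSendBufferSize(),    // SO_SNDBUF
                socket.getReceiveBufferSize()  // SO_RCVBUF
            };
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        int[] sizes = bufferSizes();
        System.out.println("send buffer: " + sizes[0] + " bytes");
        System.out.println("recv buffer: " + sizes[1] + " bytes");
    }
}
```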

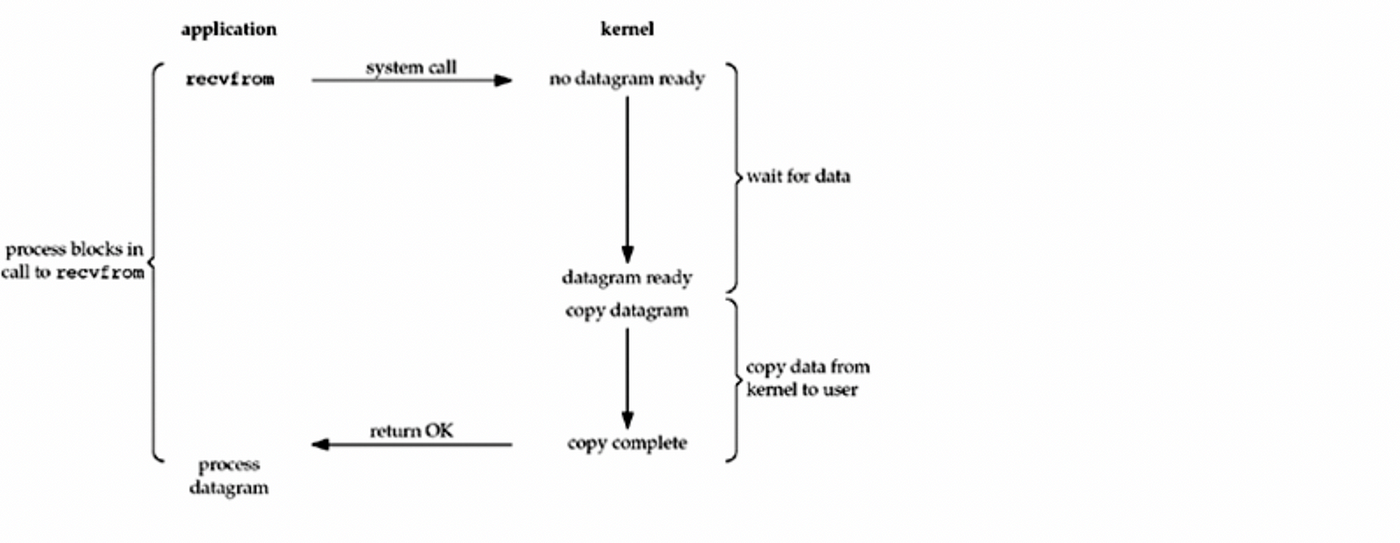

The blocking I/O model is the default mode for all sockets under Unix. The process initiates a request by invoking recvfrom, and the call blocks until data becomes available and has been copied from the kernel to the user buffer. While it is blocked, the process is unable to handle another descriptor that becomes ready in the meantime.
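The blocking behaviour can be sketched in Java as follows. To keep the example self-contained, it uses a `java.nio.channels.Pipe` as a stand-in for a socket, which is an assumption made for illustration; the class name, method name, and the "hello" payload are hypothetical too.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.charset.StandardCharsets;

class BlockingReadSketch {

    // Hypothetical helper: a writer thread sends "hello" after a delay
    // while the calling thread sits blocked in read().
    static String blockingRead(long writerDelayMillis) {
        try {
            Pipe pipe = Pipe.open(); // channels are in blocking mode by default
            Thread writer = new Thread(() -> {
                try {
                    Thread.sleep(writerDelayMillis);
                    pipe.sink().write(
                        ByteBuffer.wrap("hello".getBytes(StandardCharsets.UTF_8)));
                    pipe.sink().close(); // signals end of stream to the reader
                } catch (IOException | InterruptedException e) {
                    throw new IllegalStateException(e);
                }
            });
            writer.start();

            ByteBuffer buffer = ByteBuffer.allocate(64);
            // Each read() blocks until data arrives or the writer closes
            // its end; the thread can do nothing else in the meantime.
            while (pipe.source().read(buffer) != -1) {
                // keep reading until end of stream
            }
            writer.join();
            pipe.source().close();
            return new String(buffer.array(), 0, buffer.position(),
                              StandardCharsets.UTF_8);
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(blockingRead(200));
    }
}
```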

In contrast to the blocking I/O model, in the non-blocking I/O model we invoke recvfrom from time to time. If data is not immediately ready, the call returns an EWOULDBLOCK error, so we keep calling it in a loop until data is ready. This is called polling. When EWOULDBLOCK is received, the application can decide to retry right away, or to go do other things and come back later to poll the kernel again. This is often a waste of CPU time.
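Here is a rough Java sketch of polling. In Java NIO, a channel in non-blocking mode does not surface EWOULDBLOCK directly: `read` simply returns 0 when no data is ready. As an assumption for illustration, the example again uses a `Pipe` instead of a real socket, and all class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.charset.StandardCharsets;

class NonBlockingPollSketch {

    // Reads from an empty non-blocking channel: returns at once with 0.
    static int readWhenEmpty() {
        try {
            Pipe pipe = Pipe.open();
            pipe.source().configureBlocking(false);
            int n = pipe.source().read(ByteBuffer.allocate(16));
            pipe.sink().close();
            pipe.source().close();
            return n;
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    // Polls until the writer thread has sent "hello" and closed its end.
    static String pollUntilData(long writerDelayMillis) {
        try {
            Pipe pipe = Pipe.open();
            pipe.source().configureBlocking(false);
            Thread writer = new Thread(() -> {
                try {
                    Thread.sleep(writerDelayMillis);
                    pipe.sink().write(
                        ByteBuffer.wrap("hello".getBytes(StandardCharsets.UTF_8)));
                    pipe.sink().close();
                } catch (IOException | InterruptedException e) {
                    throw new IllegalStateException(e);
                }
            });
            writer.start();

            ByteBuffer buffer = ByteBuffer.allocate(64);
            int emptyPolls = 0;
            while (true) {
                int n = pipe.source().read(buffer);
                if (n == -1) {
                    break;            // writer closed its end
                }
                if (n == 0) {
                    emptyPolls++;     // nothing ready yet
                    Thread.sleep(10); // "go do other things", then poll again
                }
            }
            writer.join();
            pipe.source().close();
            System.out.println("empty polls: " + emptyPolls);
            return new String(buffer.array(), 0, buffer.position(),
                              StandardCharsets.UTF_8);
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(readWhenEmpty());
        System.out.println(pollUntilData(200));
    }
}
```

Every empty poll is a call into the kernel that achieves nothing, which is where the wasted CPU time comes from.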